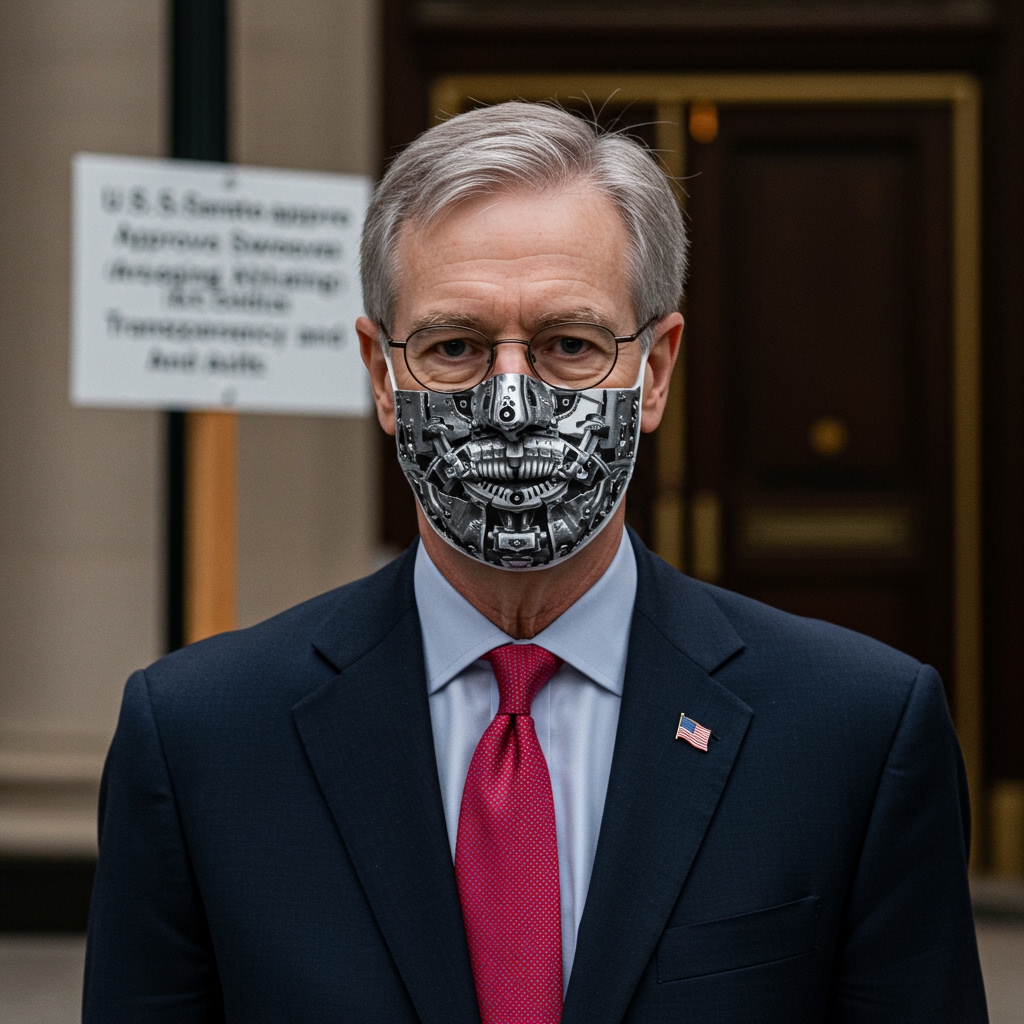

Senate Passes Landmark AI Bill

Washington, D.C. — The U.S. Senate today took a significant step towards establishing federal oversight of artificial intelligence technologies, passing the “Artificial Intelligence Transparency and Accountability Act of 2025”. The landmark legislation, aimed at addressing the potential risks and societal impacts of rapidly advancing AI systems, received bipartisan support, passing by a vote of 68-32.

The bill represents the most comprehensive effort yet by the U.S. Congress to regulate AI at a federal level. Its passage signals a growing consensus on Capitol Hill that while AI offers immense potential benefits, it also requires robust guardrails to ensure public safety, fairness, and accountability.

Establishing the Federal AI Commission

A cornerstone of the “Artificial Intelligence Transparency and Accountability Act of 2025” is the establishment of a new regulatory body, the Federal AI Commission (FAIC). This commission is envisioned as the primary federal entity tasked with overseeing the development, deployment, and impact of AI systems across various sectors of the economy and society. The FAIC will be responsible for developing specific regulations to implement the provisions of the act, including defining what constitutes a “high-risk” AI system and setting standards for transparency and audits.

The creation of a dedicated federal agency for AI regulation underscores the complexity of the technology and the unique challenges it poses, which cut across traditional regulatory boundaries. The FAIC will likely need to attract expertise spanning technology, law, ethics, and economics to carry out its mandate effectively.

Mandating Transparency: Data Disclosure Requirements

A key requirement of the newly passed bill is the mandate for developers of high-risk AI systems to disclose the sources of their training data. This provision is specifically targeted at AI used in critical sectors such as healthcare and finance, where erroneous or biased outputs can have severe consequences for individuals and society.

The requirement stems from concerns that AI systems trained on flawed, incomplete, or biased data sets can perpetuate or even amplify existing societal inequities. By compelling disclosure, the act aims to provide regulators, auditors, and potentially the public with insight into the foundations upon which these powerful systems are built, enabling better identification and mitigation of potential biases or limitations. Transparency in training data is viewed as a crucial step towards building trust in AI.

Ensuring Accountability Through Independent Audits

In addition to data disclosure, the “Artificial Intelligence Transparency and Accountability Act of 2025” mandates annual independent audits for high-risk AI systems. These audits are intended to rigorously evaluate the performance, safety, and fairness of AI applications used in critical domains.

The independent audit requirement is designed to provide an external check on the internal development processes of AI companies. Auditors will assess whether systems meet specified standards for accuracy, reliability, and non-discrimination. This accountability mechanism is particularly vital for complex, opaque AI models, often referred to as “black boxes,” where it can be difficult to understand why a particular decision or output was generated. The goal is to proactively identify and rectify issues like algorithmic bias before they lead to harmful outcomes.

Addressing High-Risk AI in Critical Sectors

The bill’s focus on high-risk AI systems used in critical sectors like healthcare and finance highlights areas where the potential for harm from unregulated AI is most significant. In healthcare, AI is increasingly used for diagnostics, treatment recommendations, and drug discovery; biases or errors could have life-threatening consequences. In finance, AI uses range from loan application review and credit scoring to fraud detection and algorithmic trading, where biased systems could lead to economic discrimination or market instability.

The specific inclusion of these sectors signals a legislative priority to first address AI risks in areas directly impacting fundamental human rights and economic stability. The FAIC, once established, will likely prioritize defining what constitutes “high-risk” within these and potentially other sectors, such as employment, housing, and criminal justice.

The Road to Enactment

Following its passage in the Senate, the “Artificial Intelligence Transparency and Accountability Act of 2025” now proceeds to the House of Representatives for consideration. The path through the House may involve committee review, further debate, and potential amendments before a final vote. Should the House pass a different version of the bill, the two chambers would need to reconcile the differences, typically in a conference committee, and approve identical text before it could be sent to the President for signature into law.

While the Senate’s passage is a major legislative milestone, the bill’s final form and enactment are not yet guaranteed. However, the strong bipartisan vote in the Senate suggests the bill could find similar support in the House.

Impact on Tech Giants

The new requirements outlined in the act are expected to significantly impact major technology companies and AI developers, including industry giants like Google, Microsoft, and OpenAI. These companies are at the forefront of AI research and deployment and operate many of the systems likely to be classified as high-risk.

Compliance with the data disclosure and independent audit mandates will require substantial organizational effort, potentially involving changes to development pipelines, increased documentation, and engagement with external auditors. While some companies may already have internal processes for evaluating bias and transparency, federal mandates with regulatory oversight will establish binding legal requirements carrying potential penalties for non-compliance.

Broader Implications and Future of AI Regulation

The passage of the “Artificial Intelligence Transparency and Accountability Act of 2025” marks a pivotal moment in the development of AI regulation in the United States. It sets a precedent for federal intervention and lays the groundwork for a regulatory framework that can evolve alongside the technology.

The bill’s focus on transparency and accountability through data disclosure and audits aligns with principles being discussed globally. Its progress will be closely watched internationally as countries grapple with how to govern AI effectively. While the act addresses key areas of concern, it is widely anticipated that further legislation and regulatory action will be needed as AI continues to advance and its applications become more widespread and complex.

This legislative action underscores a growing recognition that responsible innovation in AI requires active governance to harness its benefits while mitigating its profound risks.