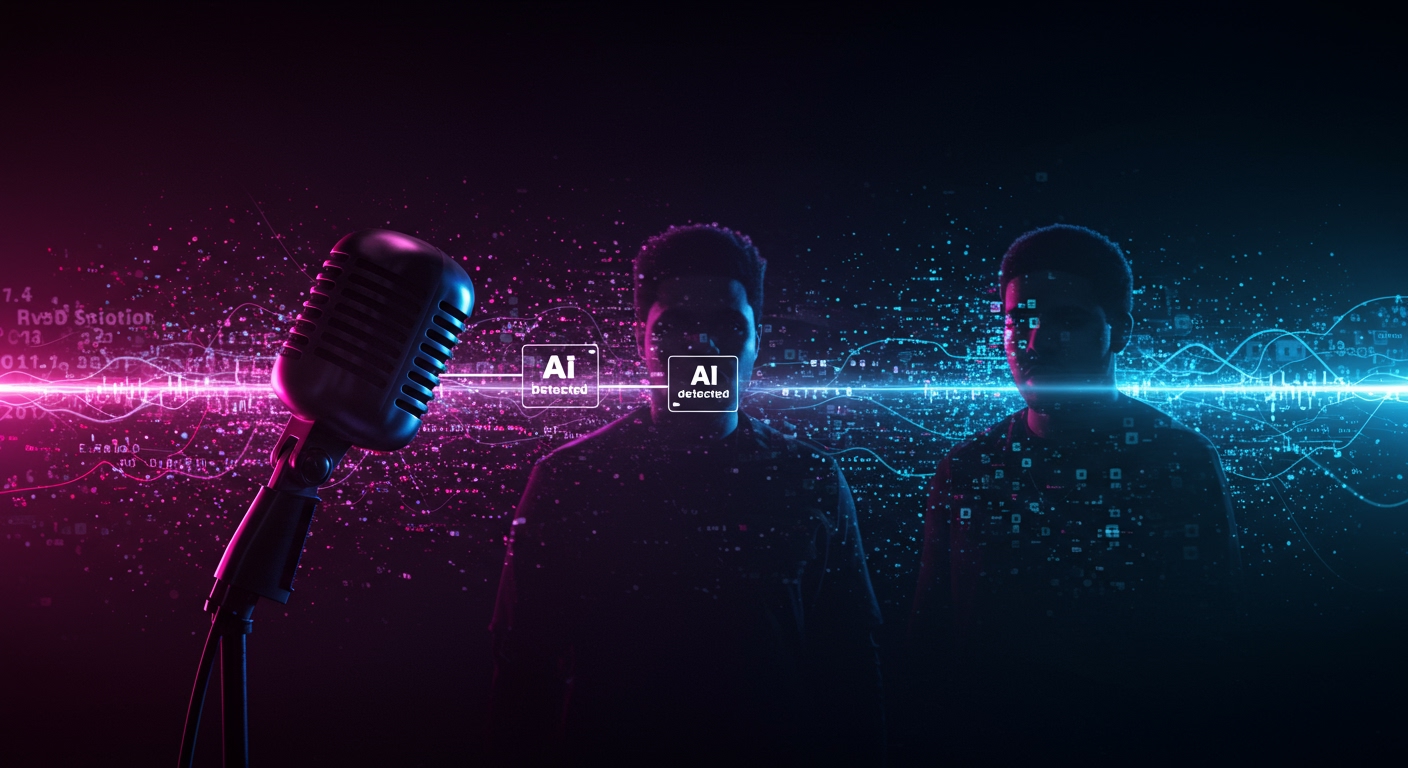

Major digital music platforms are set to implement new measures requiring the clear labeling of artificial intelligence (AI)-generated songs, a significant step prompted by the rapid proliferation of synthetic audio content and high-profile incidents that exposed the music industry’s vulnerabilities.

The Catalyst: A Viral Fake Duet

The impetus for this shift can be traced to events like the viral circulation in 2023 of a fake duet imitating Drake and The Weeknd. Titled “Heart on My Sleeve,” the AI-generated track mimicked the distinctive vocal styles of the two global superstars with startling accuracy. The song quickly amassed millions of streams across various digital music services before its synthetic origin was widely confirmed and the track was removed.

This incident served as a stark wake-up call for the music industry, highlighting not only the impressive capabilities of current AI technology but also the significant challenges it poses regarding intellectual property, artist rights, and content authenticity. The ease with which a deepfake track could achieve massive popularity underscored the urgent need for robust detection and identification mechanisms.

Industry’s Reactive Response

In the aftermath of “Heart on My Sleeve” and similar occurrences, the music industry, including major record labels and key technology platforms, has shifted its focus towards developing sophisticated tools and protocols to address the rise of synthetic audio. This involves building early detection systems designed to identify AI-generated content at various stages of its lifecycle.

This concerted effort signifies a move beyond simply reacting to viral fakes after they gain traction. Instead, the industry is attempting to integrate AI detection capabilities into the fundamental infrastructure of music distribution.

Building Tools for Identification

Music companies and tech platforms are now building tools designed to identify synthetic content. The effort spans the entire lifecycle of a musical work, from training data and creation through to upload and distribution across digital platforms. The goal is a comprehensive system that can flag AI-generated tracks, whether they mimic existing artists or are entirely new creations made with AI voices or compositional tools.

Identifying AI-generated music presents unique technical challenges. While some early AI tools leave discernible digital artifacts, the output of newer, more advanced models is becoming increasingly difficult to distinguish from human-created content. Reliable detection therefore requires sophisticated machine learning models trained on vast datasets to differentiate between authentic human performances and synthetic reproductions.

The Mandate to Flag AI Content

The most tangible outcome of these developments is the decision by music platforms to implement policies that will flag AI-generated songs. This flagging system aims to provide transparency for listeners, rights holders, and the platforms themselves. The exact nature of the flag – whether it’s a simple label, a specific icon, or more detailed information – is still being determined and may vary across platforms.

The implementation of flagging is not merely a technical challenge but also involves significant policy considerations. Defining what constitutes “AI-generated” content can be complex, especially as artists begin to use AI tools as part of their creative process, rather than solely for creating synthetic versions of other artists.

Expert Perspective on Integration

Industry experts underscore the necessity of a deeply integrated approach. According to Matt Adell, cofounder of Musical AI, integrating these detection and identification tools into the core infrastructure of music platforms and industry systems is crucial to managing the issue effectively. Adell’s perspective suggests that fragmented or bolt-on solutions are unlikely to keep pace with rapidly evolving AI technology.

Effective management requires a seamless flow of information and detection capabilities throughout the entire value chain, from the moment a track is uploaded by a distributor or artist to when it is served to a listener. This necessitates collaboration between tech companies, music distributors, rights organizations, and artists themselves.

Navigating the Future of Music and AI

The decision to flag AI-generated content represents a critical early step in the music industry’s long journey to adapt to the transformative power of artificial intelligence. While flagging offers a level of transparency, it does not resolve deeper issues surrounding compensation for artists whose voices or styles are used to train AI models, or the potential for dilution of the market with low-quality synthetic content.

The industry faces the ongoing challenge of balancing innovation and artistic expression with the need to protect creators’ rights and maintain the integrity of the music ecosystem. Regulatory bodies and legal frameworks are also likely to evolve in response to these technological advancements.

Ultimately, the move by music platforms to flag AI-generated songs is a necessary evolution in a digital landscape increasingly populated by synthetic media. It acknowledges that AI is no longer a future concept but a present force shaping how music is created, distributed, and consumed. Viral events like the unauthorized Drake-Weeknd imitation, which proved a watershed moment, drove that reality into the spotlight.