Global AI Governance Forum Proposes Sweeping Regulations for LLMs and Generative AI

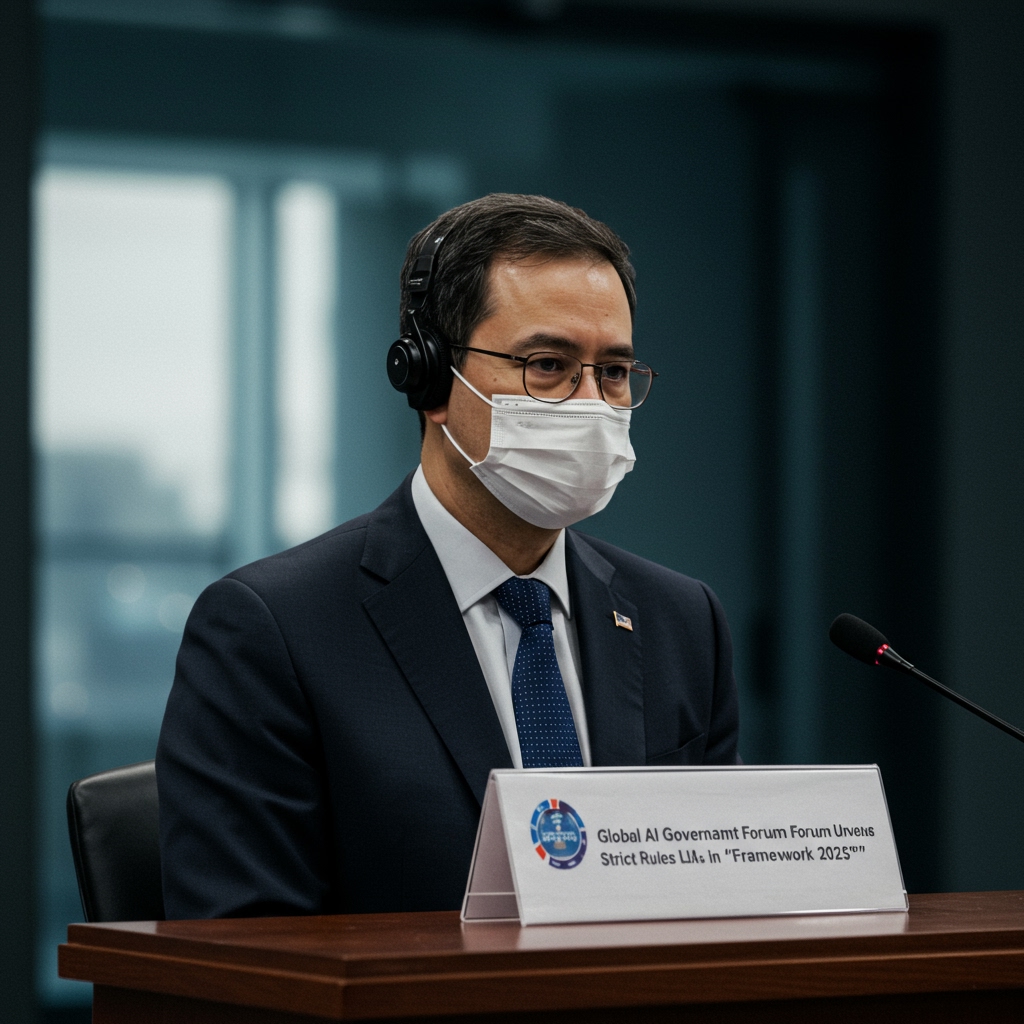

Brussels, Belgium – The Global AI Governance Forum (GAGEF), a leading international body dedicated to shaping the future of artificial intelligence regulation, took a significant step on February 14, 2025, by unveiling its proposed “Framework 2025 for Responsible AI Deployment.” This ambitious draft document sets forth a series of stringent new rules specifically targeting the developers and deployers of Large Language Models (LLMs) and other generative AI platforms. The proposal signals a growing global consensus on the urgent need for standardized guidelines to address the complex societal and ethical challenges posed by rapidly advancing AI technologies.

The core tenets of the Framework 2025 revolve around enhancing transparency, mitigating algorithmic bias, and ensuring the clear identification of AI-generated content. These pillars are designed to foster greater accountability within the AI industry and build public trust in AI systems, which are becoming increasingly integrated into daily life and critical infrastructure.

Key Provisions of Framework 2025

The proposed framework introduces several key mandates that, if implemented, would represent a substantial shift in how LLMs and generative AI are developed and deployed globally. These provisions aim to create a more predictable and responsible AI ecosystem.

Mandated Transparency in Training Data

One of the most impactful proposals within Framework 2025 is the requirement for increased transparency regarding the sources of data used to train LLMs. Under the proposed rules, developers would be mandated to provide detailed reporting on their training datasets. This could include disclosing the types of data used, the scale of the data, and, potentially, information about the provenance and licensing of the data. The rationale behind this mandate is multifaceted: it aims to shed light on potential copyright issues, identify the origins of biases embedded in the data, and allow for greater scrutiny of the ethical implications of using certain datasets. Critics and proponents alike acknowledge that implementing this level of transparency could present significant technical and logistical challenges, given the massive scale and often complex curation processes involved in training cutting-edge LLMs.

Detailed Reporting on Bias Mitigation Efforts

The framework places a strong emphasis on addressing algorithmic bias, a pervasive issue in many AI systems that can perpetuate or even amplify societal inequalities. The proposed rules require developers and deployers to submit detailed reporting on their efforts to mitigate bias in their LLMs. This reporting could encompass methodologies for identifying bias, metrics used for evaluation, strategies employed to reduce or correct bias in training data or model outputs, and ongoing monitoring practices. The goal is to move beyond mere acknowledgement of bias and enforce concrete, documented efforts to build fairer AI systems. The specific requirements for what constitutes “detailed reporting” will likely be a key point of discussion during the public consultation phase, as the effectiveness and feasibility of various bias mitigation techniques continue to be areas of active research and debate within the AI community.
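To make "metrics used for evaluation" concrete, here is a minimal Python sketch of one widely used fairness measure, the demographic parity difference: the gap between the highest and lowest rate of positive outcomes across groups. The draft framework does not name any particular metric, so the function name, the toy data, and the choice of this metric are illustrative assumptions only.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the spread in positive-outcome rates across groups.

    predictions: iterable of 0/1 model decisions (hypothetical toy format)
    groups: iterable of group labels, aligned with predictions
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    # Share of positive decisions within each group.
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Toy data, invented for illustration: 8 decisions across two groups.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates)  # positive-outcome rate per group
print(gap)    # 0 would mean equal rates; larger values mean a wider disparity
```

A single number like this captures only one notion of fairness. A report of the kind the framework describes would presumably combine several metrics with an account of how they were chosen and monitored.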

Content Watermarking Standards

Recognizing the growing concern over the proliferation of synthetic media and misinformation generated by advanced AI models, Framework 2025 proposes mandatory content watermarking standards for AI-generated output. This means that output from LLMs and generative AI platforms – whether text, images, audio, or video – would need to carry some form of identifiable mark, metadata, or embedded signal indicating its artificial origin. The purpose is to enable users and platforms to distinguish between human-created and AI-generated content, thereby combating deceptive uses of AI and enhancing media literacy. Developing a universal, robust, and tamper-proof watermarking standard that is effective across different modalities and platforms is a significant technical hurdle that the industry will need to address if this provision is adopted.
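As a rough illustration of the "metadata" option mentioned above, the following Python sketch attaches a signed provenance label to a piece of generated text and later checks it. It is an assumption-laden example, not anything specified by Framework 2025: the field names, the `label_output` and `verify_label` helpers, and the shared secret key are all hypothetical. Note that it produces a detached label rather than a signal embedded in the content itself.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; a real scheme would need proper key management.
SECRET_KEY = b"demo-key-not-for-production"


def label_output(text, model_name):
    """Build a provenance record for AI-generated text and sign it with HMAC-SHA256."""
    record = {
        "ai_generated": True,
        "generator": model_name,
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return record


def verify_label(text, record):
    """Return True only if the record is untampered and matches the given text."""
    claimed = dict(record)
    signature = claimed.pop("signature", "")
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    content_hash = hashlib.sha256(text.encode("utf-8")).hexdigest()
    # Constant-time comparison avoids leaking signature bytes via timing.
    return hmac.compare_digest(signature, expected) and claimed.get("sha256") == content_hash


label = label_output("An example generated sentence.", "example-model")
print(verify_label("An example generated sentence.", label))   # label matches the text
print(verify_label("An edited generated sentence.", label))    # text no longer matches
```

A label like this is lost as soon as the text is copied without it, which is one reason the robustness concern raised above is hard to meet: an embedded watermark has to survive editing, cropping, re-encoding, and paraphrasing, and a detached record does none of that.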

Implementation Timeline and Public Consultation

GAGEF has set forth a clear timeline for the progression of Framework 2025. The unveiling on February 14, 2025, marks the beginning of a crucial public consultation period. Stakeholders from industry, academia, civil society, and government are invited to provide feedback on the draft rules through May 2025. This consultative process is intended to gather diverse perspectives, refine the proposed mandates, and ensure the framework is both effective and implementable. Following the consultation, GAGEF aims to begin phased implementation as early as Q4 2025. The forum’s objective is to achieve compliance with the final regulations across its member nations by the close of 2026, a relatively swift pace for the adoption of global AI governance standards.

Industry Reactions and Considerations

Initial reactions from the AI industry have been varied, reflecting the complex implications of the proposed regulations. Some industry leaders have expressed support for clearer, globally coordinated guidelines, arguing that a unified framework can reduce uncertainty and prevent a patchwork of conflicting national regulations. Such clarity is seen as beneficial for long-term investment and innovation. However, significant concerns have also been raised regarding the potential technical and financial burden associated with implementing the proposed rules. Mandating detailed transparency on training data, comprehensive bias reporting, and robust watermarking standards could require substantial investment in new processes, tools, and expertise. These concerns are particularly acute for smaller AI firms and startups, which may lack the resources that larger tech corporations can devote to potentially costly regulatory compliance. Ensuring the framework does not stifle innovation or disproportionately impact smaller players will be a critical consideration during the consultation period.

The Path Forward

The Global AI Governance Forum’s Framework 2025 represents a watershed moment in the international effort to govern advanced AI. By directly addressing the specific characteristics and risks of LLMs and generative AI, GAGEF is pushing for a proactive regulatory approach. The coming months, particularly the public consultation period through May 2025, will be critical in shaping the final form of these regulations. The ultimate success of Framework 2025 will depend on balancing responsible AI development and deployment, risk mitigation, and continued innovation across the global AI landscape. The proposed deadline for compliance across member nations, the close of 2026, underscores the urgency GAGEF perceives in establishing a robust global governance structure for artificial intelligence.